BibTeX

@article{delatorre2023llmr,

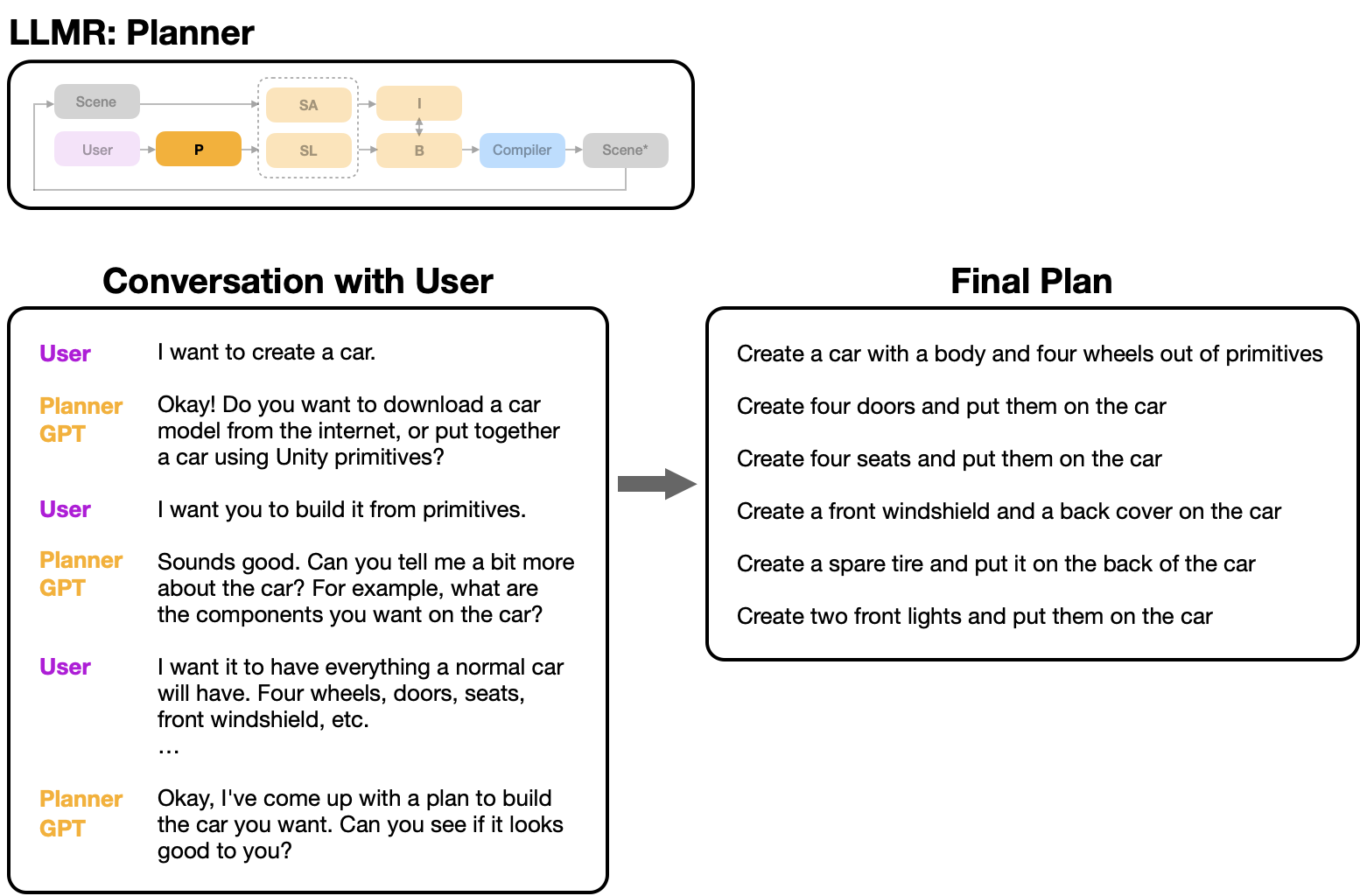

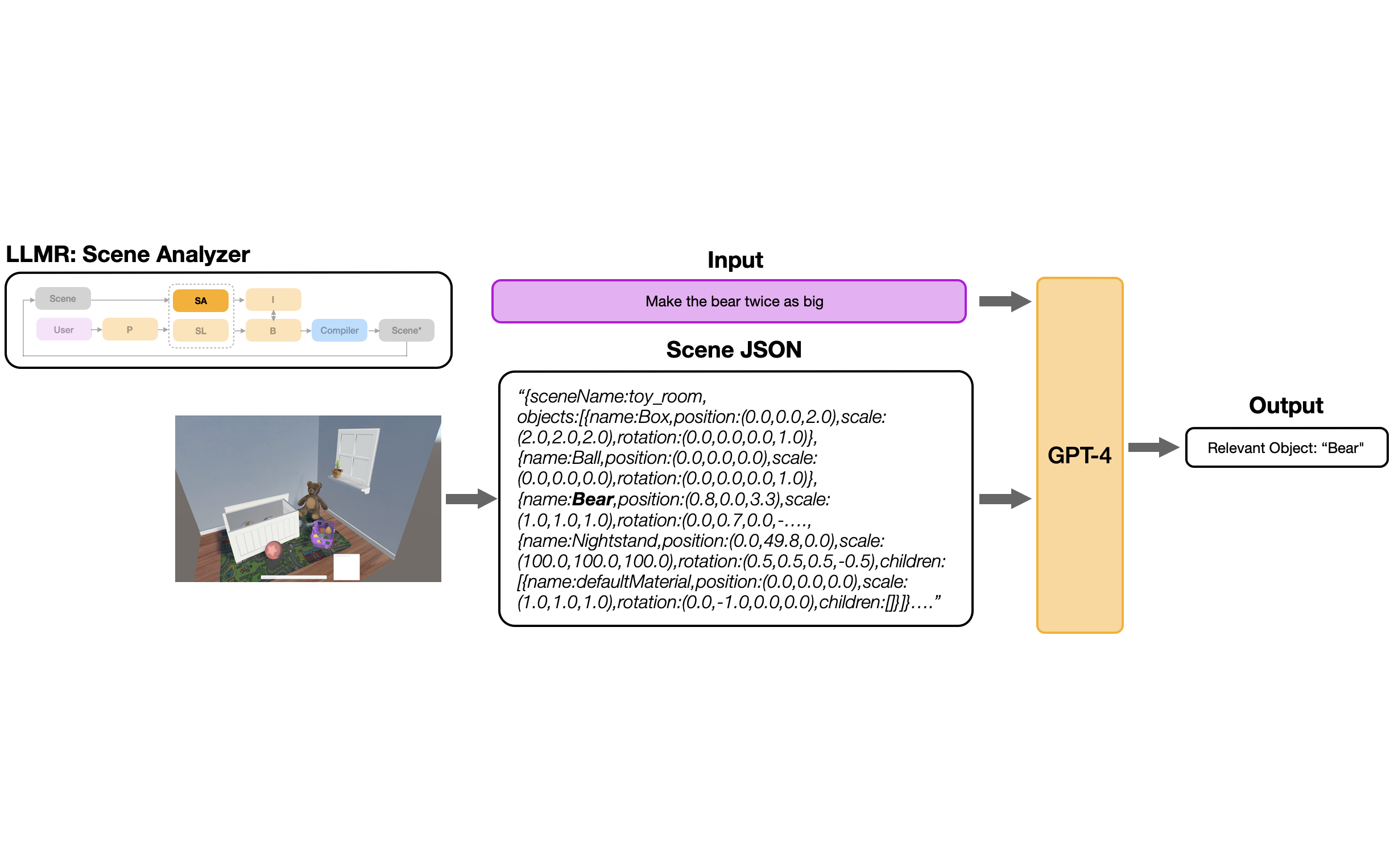

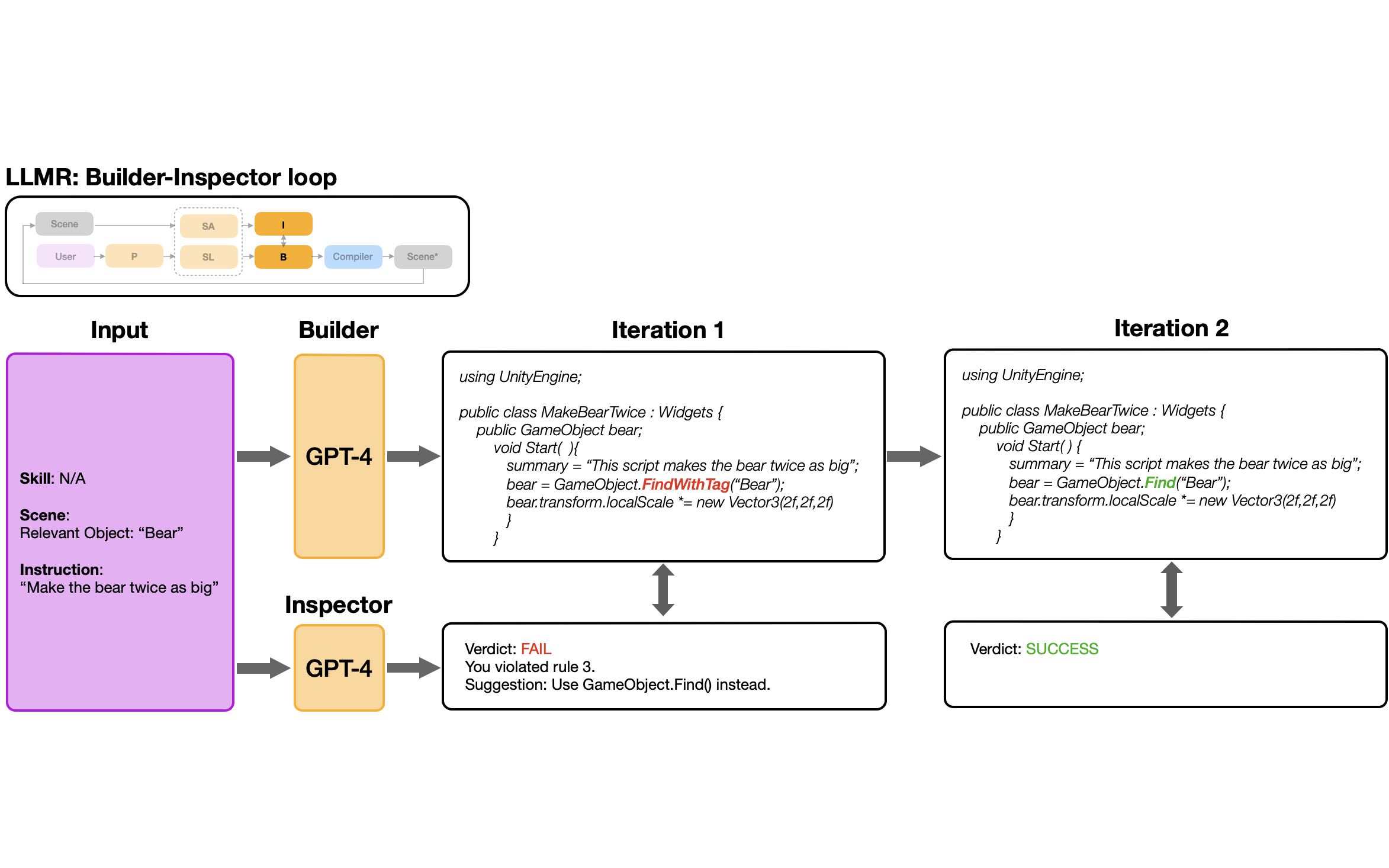

  title={LLMR: Real-time Prompting of Interactive Worlds using Large Language Models},
  author={Fernanda De La Torre and Cathy Mengying Fang and Han Huang and Andrzej Banburski-Fahey and Judith Amores Fernandez and Jaron Lanier},
  year={2023},
  eprint={2309.12276},
  archivePrefix={arXiv},
  primaryClass={cs.HC}
}

@article{huang2023real,
  title={Real-time Animation Generation and Control on Rigged Models via Large Language Models},
  author={Huang, Han and De La Torre, Fernanda and Fang, Cathy Mengying and Banburski-Fahey, Andrzej and Amores, Judith and Lanier, Jaron},
  journal={arXiv preprint arXiv:2310.17838},
  year={2023}
}